Introduction

Artificial General Intelligence (AGI) represents the holy grail of artificial intelligence research—a technological achievement that has captivated the imagination of scientists, philosophers, entrepreneurs, and science fiction writers for decades. Unlike the narrow AI systems that surround us today, which excel at specific tasks but fail when faced with challenges outside their training, AGI promises something far more profound: machine intelligence that rivals or surpasses human cognitive abilities across virtually all domains of interest. It is the difference between a calculator that can perform mathematical operations with lightning speed but cannot understand what the numbers represent, and a system that can not only calculate but also reason about the significance of those calculations in the broader context of human knowledge and experience.

The concept of AGI sits at the intersection of computer science, cognitive psychology, neuroscience, and philosophy, challenging our understanding of what intelligence truly means. It raises fundamental questions about consciousness, the nature of mind, and humanity’s unique place in the universe. As we stand at the frontier of unprecedented technological advancement, with AI systems demonstrating increasingly sophisticated capabilities in language processing, image recognition, strategic planning, and creative endeavors, the question of whether and when we might achieve AGI has moved from the realm of speculative fiction to serious scientific inquiry.

Today’s AI landscape is dominated by narrow or specialized systems—powerful tools designed to excel at specific tasks through sophisticated pattern recognition and statistical analysis. These systems have transformed industries, from healthcare to finance, transportation to entertainment. They can diagnose diseases, trade stocks, drive cars, compose music, and generate human-like text. Yet despite their impressive capabilities, these systems lack the flexibility, adaptability, and comprehensive understanding that characterize human intelligence. They cannot transfer knowledge from one domain to another without extensive retraining, they struggle with common-sense reasoning, and they lack the self-awareness and introspection that many consider essential components of general intelligence.

The pursuit of AGI represents both the ultimate goal and the greatest challenge of artificial intelligence research. It promises to revolutionize every aspect of human society, from economic structures to scientific discovery, from education to healthcare. Yet it also raises profound ethical questions about control, alignment with human values, existential risk, and the very nature of humanity in a world where we may no longer be the most intelligent entities. Understanding what AGI is, how it might be achieved, and what its implications might be has never been more important as we navigate the rapidly evolving landscape of artificial intelligence.

This article explores the concept of Artificial General Intelligence in depth, examining its definitions and characteristics, the current state of AI technology and how it compares to AGI, expert predictions on when AGI might be achieved, technical approaches being pursued, economic and social implications, ethical considerations, and governance frameworks. By providing a comprehensive overview of this complex and multifaceted topic, we aim to equip readers with the knowledge needed to participate in one of the most important conversations of our time—the potential development of machines that can think, learn, and understand the world as we do, or perhaps in ways we cannot yet imagine.

Table of contents

- Introduction

- Understanding Artificial General Intelligence

- What is Artificial General Intelligence (AGI)?

- Characteristics of AGI: Beyond Specialized Tasks

- What Can Artificial General Intelligence Do?

- Would An Artificial General Intelligence Have Consciousness?

- How Do We Stop AGI From Breaking Its Constraints?

- What Is The Future Of AGI?

- Key Challenges of Reaching the General AI Stage

- Key Milestones in AGI Research and Development

- The Turing Test and AGI: Evaluating Generalized Intelligence

- Challenges and Roadblocks in the Path to AGI

- Ethical Implications of AGI: Risks and Rewards

- AGI in Popular Culture: Sci-Fi Visions and Public Perceptions

- References


Understanding Artificial General Intelligence

The concept of Artificial General Intelligence (AGI) has evolved significantly since the early days of artificial intelligence research. While the term itself gained prominence only in the late 20th and early 21st centuries, the underlying idea of creating machines that can think like humans dates back to the very origins of the field. Alan Turing asked in 1950 whether machines can think, and the pioneers who gathered at the historic Dartmouth workshop in 1956, including John McCarthy and Marvin Minsky, envisioned machines that could simulate every aspect of human intelligence. Their ambitious goal was not to create specialized systems for narrow tasks, but rather to develop “thinking machines” with the full range of human cognitive abilities. This vision, though temporarily overshadowed by the practical successes of narrow AI, has remained the field’s ultimate aspiration.

What is Artificial General Intelligence (AGI)?

To put it simply, AGI is machine intelligence that matches human intelligence: the ability of a machine to think and understand the way a human does. Picture the human brain as a black box whose inputs are the knowledge and experiences we accumulate over our lifetime. Our brains then use this information to make decisions or solve problems in almost any scenario.

Ideally, an AGI system would be able to reason, plan, understand language, and make complex inferences, just like a human. It would not need to be programmed in advance for each specific task; instead, it could learn and adapt to new situations on its own. This contrasts with narrow AI, which can perform only a limited set of tasks, such as playing chess or recognizing faces in photos.

Even more complex systems like self-driving cars rely on narrow AI to make sense of the world around them. While these systems are impressive, we’re still far from achieving truly intelligent machines that can handle any task or situation.

The Machine Intelligence Research Institute has catalogued several tests that researchers have proposed for recognizing when AGI has been achieved. These include:

Turing Test ($100,000 Loebner Prize interpretation)

The most famous is the Turing Test, named after the computer scientist Alan Turing. The idea is simple: to pass, a machine must demonstrate natural language abilities that are indistinguishable from those of a human being. The Loebner Prize, founded by Hugh Loebner, offered a $100,000 grand prize to the developer of a machine that could pass his specific version of the test, but no system ever claimed it.

The Coffee Test

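The Coffee Test, associated with Apple co-founder Steve Wozniak and discussed by Ben Goertzel, asks a machine to enter an average American home and figure out how to make a cup of coffee: find the coffee machine, the coffee, and a mug, and brew it. Goertzel’s related Robot College Student Test sets a higher bar: a machine must enroll in a university, take and pass the same classes as human students, and earn a degree.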

The Employment Test

In a similar vein, the AI researcher Nils Nilsson argued that human-level intelligence is achieved only when a machine can perform economically important jobs, that is, when it can be employed to do the tasks usually assigned to human workers.

Defining AGI: Multiple Perspectives

Despite its centrality to AI research, there is no universally accepted definition of Artificial General Intelligence. Different researchers, institutions, and companies conceptualize AGI in various ways, reflecting the multifaceted nature of intelligence itself. According to McKinsey & Company, AGI can be understood as “a theoretical AI system with capabilities that rival those of a human,” one that would “replicate human-like cognitive abilities including reasoning, problem solving, perception, learning, and language comprehension.” This definition emphasizes the comparative aspect—AGI as a technological achievement that matches or exceeds human capabilities across multiple domains.

Scientific American highlights the lack of consensus in the field, noting that definitions range from “a machine that equals or surpasses humans in intelligence” to OpenAI’s more economically focused characterization of “highly autonomous systems that outperform humans at most economically valuable work.” This definitional ambiguity reflects deeper questions about what intelligence actually is and how it should be measured or compared.

IBM offers a more technically oriented definition, describing AGI as “a hypothetical technology where artificial machine intelligence achieves human-level learning, perception and cognitive flexibility.” This perspective emphasizes the adaptive and flexible nature of general intelligence: the ability to learn continuously and apply knowledge across different contexts and problems.

The Brookings Institution similarly notes the absence of a single, formally recognized definition, with conceptions ranging from systems that outperform humans economically to those with broad capabilities at or above human-level. This diversity of definitions reflects not just semantic differences but fundamental disagreements about the nature and scope of the AGI project.

Essential Capabilities for AGI

Despite these definitional variations, there is some consensus about the core capabilities that an AGI system would need to possess. McKinsey identifies eight key capabilities: visual perception, audio perception, fine motor skills, natural language processing, problem-solving, navigation, creativity, and social and emotional engagement. These capabilities span the sensory, motor, cognitive, and social domains of intelligence, reflecting the multidimensional nature of human cognition.

Maruyama’s framework, cited by the Brookings Institution, proposes eight attributes: logic, autonomy, resilience, integrity, morality, emotion, embodiment, and embeddedness. This approach incorporates not just cognitive abilities but also ethical dimensions and physical interaction with the environment, suggesting that true AGI might require a form of embodiment rather than existing purely as software.

Noema Magazine proposes five dimensions of generality that characterize frontier AI models: topics (breadth of knowledge), tasks (diversity of capabilities), modalities (different forms of input and output), languages (multilingual capabilities), and instructability (ability to follow directions and adapt to new requirements). This framework suggests that generality exists on a spectrum rather than as a binary distinction between narrow AI and AGI.

What unites these various frameworks is the recognition that general intelligence requires more than just computational power or pattern recognition. It demands the ability to understand context, transfer knowledge between domains, reason abstractly, learn continuously from experience, and navigate the physical and social world with appropriate responses. Perhaps most importantly, it requires what researchers often call “common sense”—the vast background knowledge and intuitive understanding of how the world works that humans acquire through experience and that proves remarkably difficult to encode in artificial systems.

The Gap Between Current AI and AGI

Today’s most advanced AI systems, including large language models like GPT-4, Gemini, and Claude, demonstrate impressive capabilities in language understanding, generation, and certain forms of reasoning. They can write essays, code computer programs, analyze data, create images, and engage in seemingly meaningful conversations. Yet despite these achievements, they fall short of true general intelligence in several crucial ways.

Current AI systems lack genuine understanding of the content they process. They operate through sophisticated pattern recognition and statistical prediction rather than through conceptual comprehension. They have no internal model of the world that corresponds to human understanding, no consciousness or self-awareness, and no intrinsic motivation or goals. They cannot truly reason causally, struggle with physical reasoning about the real world, and lack the embodied experience that shapes human cognition.

As IBM notes, “Today’s AI (including generative AI) is often called narrow AI and it excels at sifting through massive data sets to identify patterns, apply automation to workflows and generate human-quality text. However, these systems lack genuine understanding and can’t adapt to situations outside their training.” This limitation becomes apparent when such systems make confident but nonsensical statements (often called “hallucinations”), fail to recognize obvious contradictions, or struggle with tasks requiring physical interaction with the world.

The gap between current AI and AGI is not merely quantitative—a matter of more data or computing power—but qualitative, involving fundamental differences in architecture, learning approaches, and perhaps even the underlying philosophy of what intelligence entails. Bridging this gap remains one of the greatest scientific and engineering challenges of our time, one that may require not just incremental improvements to existing approaches but revolutionary new paradigms in how we conceptualize and build intelligent systems.

The Current State of AI Technology

The artificial intelligence landscape has undergone a remarkable transformation in recent years, with advances that would have seemed like science fiction just a decade ago. Today’s AI systems demonstrate capabilities that increasingly blur the line between specialized tools and more general intelligence, raising important questions about how close we might be to achieving AGI. Understanding the current state of AI technology provides essential context for evaluating the gap between today’s systems and true artificial general intelligence.

The Rise of Foundation Models

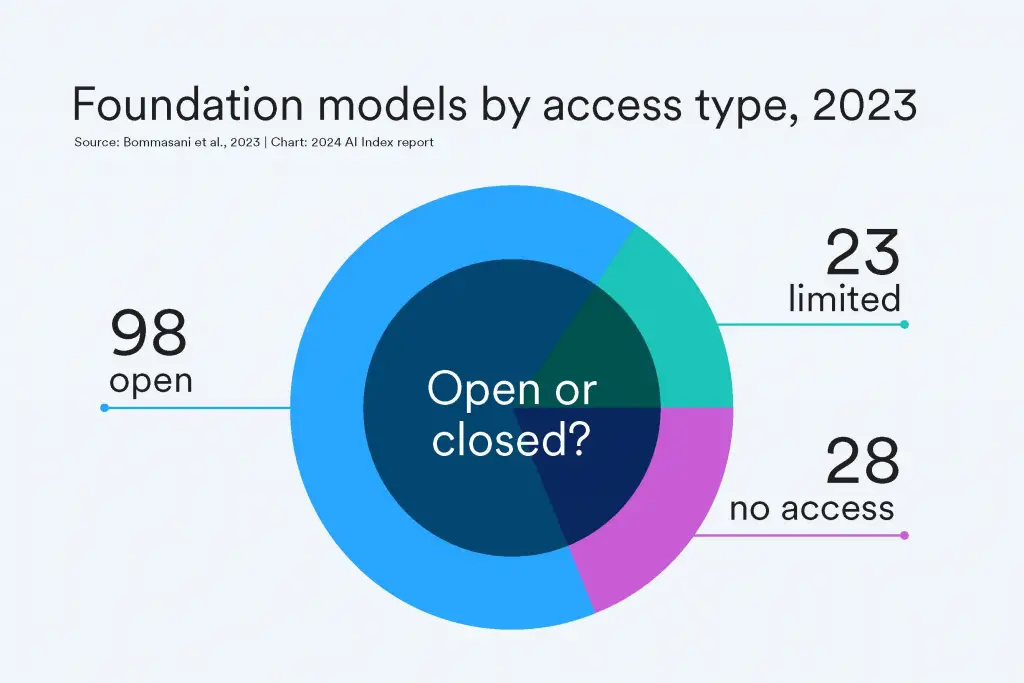

The AI field has been revolutionized by the emergence of foundation models—large-scale neural networks trained on vast datasets that serve as the basis for a wide range of applications. According to Stanford HAI’s AI Index, organizations released 149 foundation models in 2023 alone, more than double the number released in 2022. This proliferation reflects both the technical success of these approaches and the intense commercial and research interest they have generated.

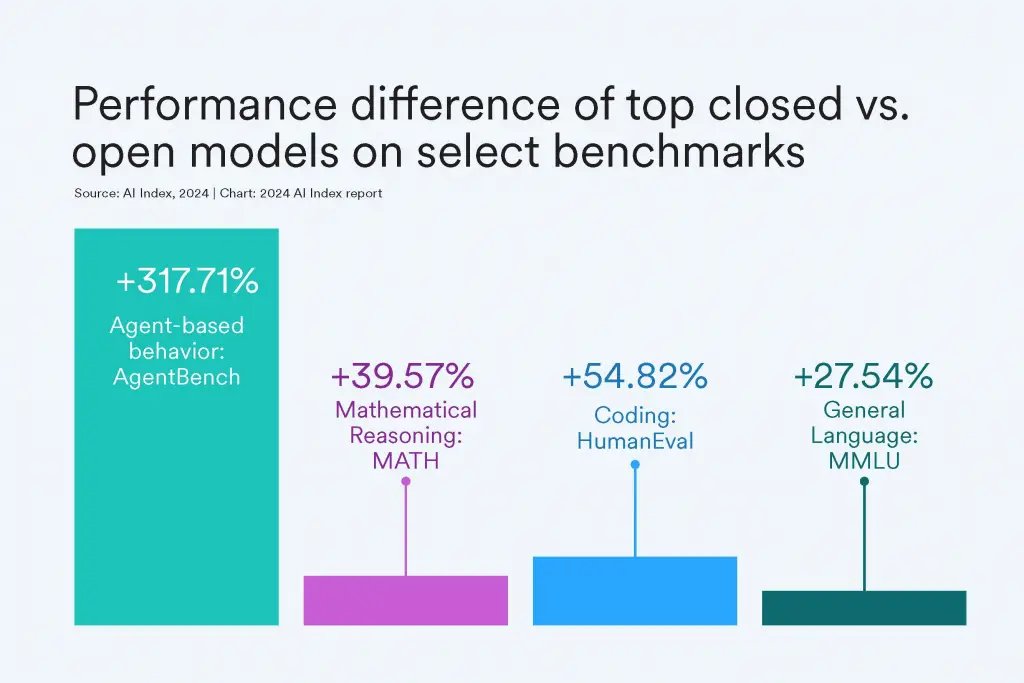

A notable trend in this space is the growing prominence of open-source models. Of the foundation models released in 2023, 65.7% were open-source (meaning they can be freely used and modified by anyone), compared with only 44.4% in 2022 and 33.3% in 2021. This shift toward openness has democratized access to powerful AI capabilities, enabling broader experimentation and application development. However, it comes with a performance trade-off: closed-source models still outperform their open-source counterparts. On 10 selected benchmarks, closed models achieved a median performance advantage of 24.2%, with differences ranging from as little as 4.0% on mathematical tasks to as much as 317.7% on agentic tasks that require more sophisticated reasoning and planning.
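To make these percentages concrete, the sketch below assumes that a “performance advantage” is the relative difference between a closed model’s score and an open model’s score on the same benchmark; the AI Index’s exact methodology may differ. The benchmark names and scores are invented for illustration. Under this reading, a 317.7% advantage means the closed model scored roughly 4.2 times as high as the open one.

```python
from statistics import median


def relative_advantage(closed_score: float, open_score: float) -> float:
    """Percentage by which the closed model's score exceeds the open model's score."""
    return (closed_score - open_score) / open_score * 100


# Hypothetical (closed, open) scores on three benchmarks; NOT AI Index data.
scores = {
    "benchmark_a": (52.0, 50.0),
    "benchmark_b": (62.1, 50.0),
    "benchmark_c": (41.77, 10.0),
}

advantages = {name: relative_advantage(c, o) for name, (c, o) in scores.items()}
median_advantage = median(advantages.values())

for name, adv in advantages.items():
    # A relative advantage of X% means the closed score is (1 + X/100) times the open score.
    print(f"{name}: {adv:.1f}% advantage ({1 + adv / 100:.2f}x the open score)")
print(f"median advantage: {median_advantage:.1f}%")
```

A median is used rather than a mean because a single extreme gap, such as the one on agentic tasks, would otherwise dominate the summary.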

The development of these models remains heavily concentrated in the private sector, with industry accounting for 72% of all new foundation models in 2023. Google led in releasing the most models, including Gemini and RT-2, followed by OpenAI, Meta, and other major technology companies. This industry dominance reflects the enormous computational resources required for training state-of-the-art models: Google’s Gemini Ultra cost an estimated $191 million worth of compute to train, while OpenAI’s GPT-4 cost an estimated $78 million. These figures represent a staggering increase from earlier models; the original Transformer model, introduced in 2017, cost around $900 to train.
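A back-of-the-envelope calculation shows the scale of this increase. The sketch divides the quoted cost estimates and, assuming a smooth exponential trend over the roughly six years between the Transformer (2017) and these 2023 models, derives an implied yearly multiplier. This is only a rough illustration: the figures are estimates for different models, and real training costs did not grow smoothly.

```python
# Estimated training costs quoted above, in US dollars.
TRANSFORMER_2017 = 900
GPT4_2023 = 78_000_000
GEMINI_ULTRA_2023 = 191_000_000


def cost_growth(new_cost: float, old_cost: float, years: float) -> tuple:
    """Return the overall cost multiplier and the constant yearly multiplier it implies."""
    factor = new_cost / old_cost
    yearly = factor ** (1 / years)  # assumes smooth exponential growth (a simplification)
    return factor, yearly


for label, cost in [("GPT-4", GPT4_2023), ("Gemini Ultra", GEMINI_ULTRA_2023)]:
    factor, yearly = cost_growth(cost, TRANSFORMER_2017, years=6)
    print(f"{label}: {factor:,.0f}x the Transformer's cost, about {yearly:.1f}x per year")
```

Under these assumptions, the estimates imply training costs multiplying roughly sixfold to eightfold per year.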

Large Language Models and Their Capabilities

Large Language Models (LLMs) like GPT-4, Claude, and Gemini represent the most visible and commercially successful application of foundation model technology. These systems have demonstrated remarkable proficiency in language understanding and generation, enabling applications from conversational assistants and content creation to code generation and information retrieval. Their capabilities extend beyond simple text manipulation to include forms of reasoning, problem-solving, and even creative expression that appear to mimic aspects of human cognition.

Modern LLMs can write coherent essays on complex topics, translate between languages with high accuracy, summarize lengthy documents, answer questions based on provided context, generate computer code, and engage in nuanced conversations that maintain context over many exchanges. They have achieved human-level or near-human-level performance on standardized tests like the SAT, LSAT, and medical licensing exams, suggesting mastery of certain forms of knowledge and reasoning that were previously considered uniquely human.

These models have also demonstrated unexpected emergent capabilities—skills that were not explicitly designed into the systems but arose from scale and training. For example, GPT-4 can solve novel reasoning problems, explain jokes, create visual art through text-to-image systems, and even exhibit rudimentary forms of theory of mind (understanding that others have different knowledge and beliefs). Such emergent capabilities have led some researchers to suggest that these systems might be approaching aspects of general intelligence.

What Today’s AI Systems Still Lack

Despite these impressive achievements, significant gaps remain between today’s AI systems and true artificial general intelligence. Current models excel at pattern recognition and statistical prediction within their training distribution but struggle with several capabilities that humans take for granted:

- Causal reasoning: Today’s AI systems can identify correlations but struggle to understand cause-and-effect relationships. They cannot reliably determine which factors cause particular outcomes or reason about counterfactuals (what would have happened if circumstances were different).

- Common sense reasoning: Humans possess an intuitive understanding of how the physical world works—that objects fall when unsupported, that containers can hold things, that solid objects cannot pass through each other. AI systems lack this intuitive physics and must learn such relationships from data, often imperfectly.

- Robustness and adaptability: Current AI systems are brittle, performing well on problems similar to their training data but failing when faced with novel situations or adversarial examples. They cannot adapt to new environments or tasks without extensive retraining.

- Embodied intelligence: Many researchers argue that true intelligence requires embodiment—interaction with the physical world through perception and action. Most current AI systems exist purely in the digital realm, lacking the sensorimotor experience that shapes human cognition.

- Self-awareness and consciousness: Current AI systems have no self-model, no introspective capabilities, and no subjective experience. They cannot reflect on their own knowledge, recognize their limitations, or set their own goals.

- Integrated intelligence: Human intelligence integrates perception, action, emotion, memory, reasoning, and social understanding into a unified cognitive system. Today’s AI approaches tend to excel in specific domains but lack this integration.

These limitations highlight the fundamental difference between the statistical pattern matching that characterizes current AI and the rich, contextual understanding that defines human intelligence. As IBM notes, “While the progress is exciting, the leap from weak AI to true AGI is a significant challenge. Researchers are actively exploring artificial consciousness, general problem-solving and common-sense reasoning within machines.”

Is AGI Already Here? The Controversial Perspective

While most researchers emphasize the limitations of current AI systems, some have advanced the controversial claim that we may have already achieved the early stages of artificial general intelligence. In an essay for Noema Magazine, Blaise Agüera y Arcas and Peter Norvig argue that frontier models like ChatGPT, Bard, LLaMA, and Claude “can perform competently on novel tasks they weren’t trained for” and have crossed the threshold to AGI along the five dimensions of generality described earlier: topics, tasks, modalities, languages, and instructability.

This perspective gained attention when Microsoft researchers published a paper titled “Sparks of Artificial General Intelligence: Early experiments with GPT-4,” claiming that the model shows “more general intelligence than any AI system previously reported.” They cited GPT-4’s ability to pass a wide range of academic and professional exams, solve novel reasoning tasks, and demonstrate theory of mind capabilities as evidence for this claim.

Critics counter that these systems still lack true understanding, consciousness, agency, and many other hallmarks of general intelligence. They argue that performance on benchmarks designed for humans does not necessarily indicate human-like intelligence, and that these systems remain fundamentally different from human cognition in their architecture, learning mechanisms, and capabilities. The debate highlights the challenge of defining and measuring intelligence itself—whether it should be understood functionally (in terms of what a system can do) or mechanistically (in terms of how it works).

This controversy reflects a broader tension in AI research between those who see current approaches as fundamentally limited and those who believe that continued scaling of existing architectures, combined with innovative training methods, may eventually bridge the gap to true AGI. It also underscores the importance of developing better frameworks for evaluating and comparing different forms of intelligence, both natural and artificial.

Expert Predictions on AGI Timeline

One of the most intriguing and contentious questions in the field of artificial intelligence is when—or if—we might achieve artificial general intelligence. While the development of AGI would represent a watershed moment in human technological achievement, there is remarkable disagreement among experts about the timeline for this potential breakthrough. Understanding these predictions, their basis, and their limitations provides valuable insight into both the current state of AI research and the challenges that lie ahead on the path to AGI.

Survey Data from AI Researchers

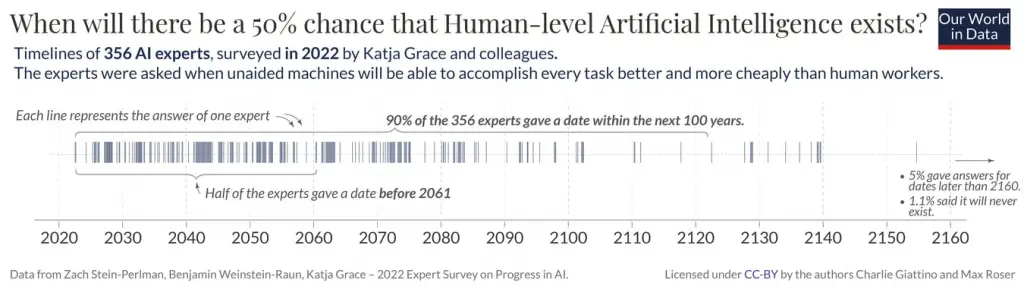

Several research teams have conducted surveys of AI experts to gauge their expectations about AGI timelines. One comprehensive study, conducted by Katja Grace and colleagues in the summer of 2022, surveyed 352 researchers who had published at leading AI conferences. These experts were asked in which year they believe there is a 50% chance that human-level AI will exist, with human-level AI defined as “unaided machines being able to accomplish every task better and more cheaply than human workers.”

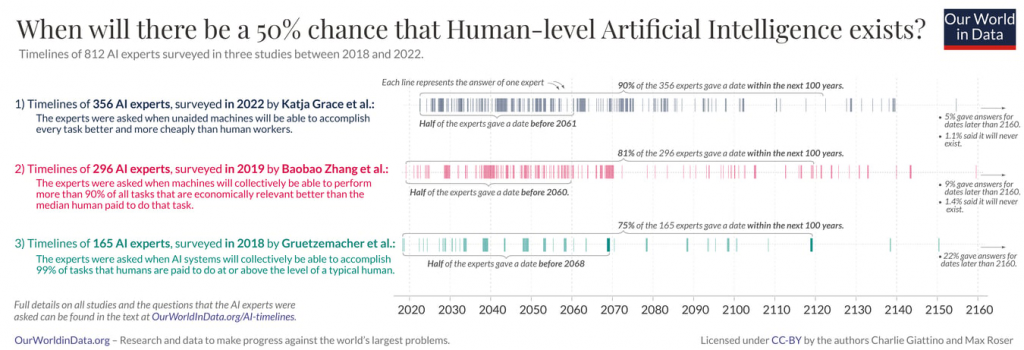

The results revealed significant disagreement within the expert community. Each expert’s prediction is represented by a vertical line on a timeline extending from the present to beyond 2100. The distribution of these predictions shows a wide spread, with some experts believing AGI will never be developed, others expecting it within the next decade, and many falling somewhere in between. Despite this variation, there are some notable patterns: half of the experts gave a date before 2061, and 90% gave a date within the next 100 years.
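The two summary statistics in this paragraph are the median of the experts’ answers and the share of answers that fall within a 100-year horizon. The sketch computes both for a small set of invented answers (not the survey’s data), using `math.inf` as an assumed encoding for experts who say AGI will never be developed.

```python
import math
from statistics import median

SURVEY_YEAR = 2022
# Invented "50% chance" years for illustration only; math.inf stands for "never".
answers = [2028, 2032, 2040, 2047, 2055, 2060, 2075, 2100, 2150, math.inf]


def summarize(years, survey_year, horizon=100):
    """Return the median answer and the share of answers within `horizon` years."""
    med = median(years)  # half of the answers fall at or before this year
    share_within = sum(y <= survey_year + horizon for y in years) / len(years)
    return med, share_within


med, share = summarize(answers, SURVEY_YEAR)
print(med, share)  # 2057.5 0.8
```

Encoding “never” as infinity lets those answers count toward the total without distorting the median, which depends only on the middle of the sorted list.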

This survey is not an outlier. Similar studies conducted in 2018 and 2019 show comparable patterns of disagreement. When the results of these three surveys are combined, representing the views of 812 individual AI experts, we see consistent diversity in timeline predictions. Across all three surveys, more than half of the experts believe there is a 50% chance that human-level AI would be developed before some point in the 2060s—within the lifetime of many people alive today.

The wide variation in these predictions reflects several factors: different definitions of AGI, different assessments of technical challenges, different assumptions about research funding and focus, and different philosophical perspectives on the nature of intelligence itself. It also highlights the inherent difficulty of predicting technological breakthroughs, especially those that may require fundamental advances in our understanding of intelligence and cognition.

The Metaculus Community Forecast

Beyond academic experts, another source of AGI timeline predictions comes from the forecasting community at Metaculus.com. This online platform brings together people dedicated to making accurate predictions about future events, with a system that tracks their performance over time to provide feedback and improve forecasting accuracy.

As of late 2022, the Metaculus forecaster community put a 50/50 chance on an “Artificial General Intelligence” being “devised, tested, and publicly announced” by the year 2040, less than two decades after the forecast was made. This timeline is notably shorter than the median prediction from academic experts, perhaps reflecting a more optimistic assessment of technological progress or a different definition of what constitutes AGI.

Interestingly, the Metaculus community has significantly shortened its expected timeline in recent years. In the spring of 2022, following several impressive AI breakthroughs including advances in large language models, the community moved its forecast earlier by approximately a decade. This shift demonstrates how quickly new developments in AI can change expectations about future progress.

Ajeya Cotra’s Research on Compute Trends

A different approach to predicting AGI timelines comes from Ajeya Cotra, a researcher at the nonprofit Open Philanthropy. Rather than relying on expert surveys, Cotra’s influential 2020 study examined long-term trends in the computational resources used to train AI systems, using these patterns to forecast when transformative AI might become possible and affordable.

Cotra estimated that there is a 50% chance that a transformative AI system will become possible and affordable by the year 2050. This central estimate was accompanied by substantial uncertainty bounds, with her “most aggressive plausible” scenario suggesting a date as early as 2040 and her “most conservative plausible” scenario extending to 2090. The span from 2040 to 2090 in these “plausible” forecasts emphasizes the significant uncertainty inherent in such predictions.

Like the Metaculus community, Cotra has updated her timeline in response to recent AI developments. In 2022, she published an update that shortened her median timeline by a full ten years, now suggesting a 50% chance of transformative AI by 2040. This revision reflects the accelerating pace of AI research and development, particularly in large language models and multimodal systems.
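Compute-trend forecasts of this kind compare two quantities: a probability distribution over how much training compute transformative AI might require, and a projection of how much compute will be affordable in each future year. The toy sketch below illustrates only that logic; it is not Cotra’s model, and every parameter (a normal distribution over log10 FLOP, a fixed yearly growth in affordable compute) is a hypothetical placeholder. It also shows why such forecasts come with wide bounds: the spread of the requirement distribution translates directly into a spread of years.

```python
import math
from typing import Optional

# Hypothetical placeholder parameters; these are NOT Cotra's estimates.
REQ_MEDIAN_LOG10 = 35.0  # median of the assumed compute requirement, in log10(FLOP)
REQ_SD_LOG10 = 3.0       # uncertainty of that requirement, in orders of magnitude
BASE_YEAR = 2020
BASE_LOG10 = 24.0        # log10(FLOP) assumed affordable for one training run in BASE_YEAR
GROWTH_LOG10 = 0.5       # assumed orders of magnitude gained per year


def normal_cdf(x: float, mean: float, sd: float) -> float:
    """Cumulative distribution function of a normal distribution."""
    return 0.5 * (1.0 + math.erf((x - mean) / (sd * math.sqrt(2.0))))


def affordable_log10(year: int) -> float:
    """Projected affordable training compute (log10 FLOP), growing exponentially."""
    return BASE_LOG10 + GROWTH_LOG10 * (year - BASE_YEAR)


def prob_affordable_by(year: int) -> float:
    """Probability that the uncertain requirement is at or below what is affordable."""
    return normal_cdf(affordable_log10(year), REQ_MEDIAN_LOG10, REQ_SD_LOG10)


def first_year_reaching(p: float, last_year: int = 2200) -> Optional[int]:
    """Earliest year in which the cumulative probability reaches p."""
    for year in range(BASE_YEAR, last_year + 1):
        if prob_affordable_by(year) >= p:
            return year
    return None


for p in (0.1, 0.5, 0.9):
    print(f"{p:.0%} chance by {first_year_reaching(p)}")
# 10% chance by 2035
# 50% chance by 2042
# 90% chance by 2050
```

Shifting the assumed requirement or growth rate by even a few orders of magnitude moves every one of these years, which is why published forecasts of this kind report broad ranges alongside a central estimate.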

Factors Influencing Timeline Predictions

Several key factors influence predictions about AGI timelines:

- Compute availability: The exponential growth in computing power has been a major driver of AI progress. Some researchers believe that continued scaling of computational resources, following trends like Moore’s Law or increased investment in specialized AI hardware, will eventually lead to AGI. Others argue that we may face physical or economic limits to computation that will slow progress.

- Algorithm improvements: Breakthroughs in AI algorithms, such as the development of transformers for language models or new approaches to reinforcement learning, can dramatically accelerate progress. The unpredictable nature of such breakthroughs makes timeline forecasting particularly challenging.

- Data availability: Modern AI systems require enormous amounts of training data. The availability of high-quality data for training future systems, particularly for specialized domains or for teaching systems about the physical world, may become a limiting factor.

- Research funding and focus: The level of investment in AGI research, both from private companies and government agencies, can significantly impact the pace of progress. Recent years have seen unprecedented levels of funding flowing into AI research, potentially accelerating timelines.

- Definition of AGI: Perhaps most fundamentally, different conceptions of what constitutes “artificial general intelligence” lead to different timeline predictions. Those with more modest definitions may see AGI as achievable in the near term, while those with more demanding criteria may expect a much longer timeline.

Limitations of Timeline Predictions

While expert surveys and trend analyses provide valuable perspectives on potential AGI timelines, they should be interpreted with several important caveats. First, experts in a particular technology are not necessarily experts in predicting the future of that technology. The history of technology is replete with examples of experts making wildly inaccurate predictions about developments in their own fields. As Max Roser notes, “Wilbur Wright is quoted as saying, ‘I confess that in 1901, I said to my brother Orville that man would not fly for 50 years.’ Two years later, ‘man’ was not only flying, but it was these very men who achieved the feat.”

Second, survey results can be heavily influenced by framing effects—how questions are worded can significantly impact the answers received, even when the questions are logically equivalent. This suggests that expert predictions may reflect cognitive biases as much as informed judgment about technological trajectories.

Finally, predictions about transformative technologies like AGI are inherently challenging because such breakthroughs may change the very conditions that made the predictions possible. If AGI is developed, it might accelerate further AI research, leading to a feedback loop that invalidates linear projections based on historical trends.

Despite these limitations, the convergence of multiple forecasting approaches on timelines that suggest a significant probability of AGI within the next few decades should give us pause. While we cannot know with certainty when or if AGI will be developed, the possibility that it might emerge within our lifetimes or those of our children makes questions about its nature, implications, and governance all the more pressing.

Technical Approaches to Achieving AGI

The quest for artificial general intelligence has inspired diverse technical approaches, each reflecting different philosophical perspectives on the nature of intelligence and different assessments of the most promising paths forward. While no approach has yet yielded true AGI, understanding these various methodologies provides insight into both the current state of AI research and the challenges that lie ahead. This section explores the major technical paradigms being pursued in the journey toward AGI, their strengths and limitations, and the fundamental challenges that researchers face regardless of their chosen approach.

Deep Learning and Neural Networks

Deep learning, particularly through artificial neural networks, has dominated AI research in recent years and produced many of the field’s most impressive achievements. These systems, loosely inspired by the structure of the human brain, consist of layers of interconnected nodes (neurons). Each node computes a weighted sum of the previous layer’s outputs and passes it through a nonlinear function, and training adjusts the strengths of these connections (the weights) so that the network’s outputs better fit the training data. The “deep” in deep learning refers to the many layers that allow these networks to learn increasingly abstract representations of their input data.
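The layered structure and the idea of adjusting connection strengths can be shown with a deliberately tiny example. The sketch below, in plain Python, builds a hypothetical two-layer network (two inputs, three hidden nodes, one output) and trains it with backpropagation on the logical OR function. Real deep learning systems rest on the same basic idea but use far more layers and weights, much larger datasets, and optimized numerical libraries.

```python
import math
import random


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


class TinyNet:
    """Hypothetical 2-input -> 3-hidden -> 1-output network with sigmoid nodes."""

    def __init__(self, hidden: int = 3, seed: int = 0):
        rng = random.Random(seed)
        # w1[j][i]: connection strength from input i to hidden node j
        self.w1 = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(hidden)]
        self.b1 = [0.0] * hidden
        # w2[j]: connection strength from hidden node j to the output node
        self.w2 = [rng.uniform(-1, 1) for _ in range(hidden)]
        self.b2 = 0.0

    def forward(self, x):
        # Each layer takes weighted sums of the previous layer, then applies a nonlinearity.
        h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
             for row, b in zip(self.w1, self.b1)]
        y = sigmoid(sum(w * hj for w, hj in zip(self.w2, h)) + self.b2)
        return h, y

    def train_step(self, x, target, lr):
        h, y = self.forward(x)
        # Backpropagation of the squared error 0.5 * (y - target)^2.
        d_out = (y - target) * y * (1 - y)
        d_hidden = [d_out * w * hj * (1 - hj) for w, hj in zip(self.w2, h)]
        # Nudge every connection strength in the direction that reduces the error.
        for j, hj in enumerate(h):
            self.w2[j] -= lr * d_out * hj
        self.b2 -= lr * d_out
        for j, dj in enumerate(d_hidden):
            for i, xi in enumerate(x):
                self.w1[j][i] -= lr * dj * xi
            self.b1[j] -= lr * dj


def loss(net, data):
    return sum(0.5 * (net.forward(x)[1] - t) ** 2 for x, t in data) / len(data)


DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]  # logical OR
net = TinyNet()
before = loss(net, DATA)
for epoch in range(2000):
    for x, t in DATA:
        net.train_step(x, t, lr=0.5)
after = loss(net, DATA)
print(f"loss before: {before:.4f}, after: {after:.4f}")
```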

The transformer architecture, introduced in 2017, has proven particularly successful for language processing and has formed the foundation for models like GPT-4, Claude, and Gemini. These large language models (LLMs) are trained on vast corpora of text to predict the next token (roughly, a word or word fragment) in a sequence. In the process, they develop sophisticated representations of language that enable them to generate coherent text, answer questions, and perform various reasoning tasks.
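The next-word objective can be illustrated without a transformer at all. The sketch below is a toy bigram counter, chosen for brevity. It learns which word most often follows each word in a tiny, made-up corpus. A transformer replaces these raw counts with a learned neural network that conditions on the entire preceding context. The training signal, predicting what comes next, is the same in spirit.

```python
from collections import Counter, defaultdict
from typing import Dict, Optional


def train_bigram(corpus: str) -> Dict[str, Counter]:
    """Count how often each word is followed by each other word."""
    words = corpus.lower().split()
    counts: Dict[str, Counter] = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def next_word_probs(counts: Dict[str, Counter], word: str) -> Dict[str, float]:
    """Turn raw follower counts into a probability distribution over next words."""
    followers = counts.get(word.lower(), Counter())
    total = sum(followers.values())
    return {w: c / total for w, c in followers.items()} if total else {}


def predict_next(counts: Dict[str, Counter], word: str) -> Optional[str]:
    """Return the most likely next word, or None for a word never seen before."""
    probs = next_word_probs(counts, word)
    return max(probs, key=probs.get) if probs else None


model = train_bigram("the cat sat on the mat the cat ate")
print(predict_next(model, "the"))  # cat
print(next_word_probs(model, "the"))
```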

Proponents of deep learning approaches to AGI argue that continued scaling of model size, training data, and computational resources may eventually lead to emergent capabilities that approximate general intelligence. They point to the unexpected abilities that have already emerged in large models as evidence that this path could lead to AGI through what has been called the “scaling hypothesis”—the idea that intelligence emerges naturally from sufficiently large neural networks trained on sufficiently diverse data.

Critics, however, note that current deep learning systems lack many capabilities essential for general intelligence, including robust causal reasoning, common sense understanding, and the ability to learn efficiently from small amounts of data. They argue that simply scaling existing architectures may not bridge these gaps and that fundamental innovations in architecture and training methods will be necessary.

Reinforcement Learning Approaches

Reinforcement learning (RL) represents another major paradigm in AI research, focused on training agents to make sequences of decisions to maximize some notion of cumulative reward. Unlike supervised learning, which relies on labeled examples, RL systems learn through trial and error, receiving feedback on their actions and adjusting their behavior accordingly.
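Tabular Q-learning, one classic RL algorithm, shows trial-and-error learning most plainly. It is not claimed to be the method behind any particular system named here. The sketch assumes a hypothetical five-cell corridor with a reward only at the right end. The agent starts knowing nothing, explores at random some of the time, and gradually updates its estimate of how valuable each action is in each state. The learning rate, discount, and exploration rate are illustrative choices.

```python
import random
from typing import Dict, List, Tuple

N_STATES = 5          # corridor cells 0..4; cell 4 is the goal (assumed toy task)
ACTIONS = (-1, +1)    # move left or move right


def move(state: int, action: int) -> Tuple[int, float, bool]:
    """Environment: apply the action, clip to the corridor, reward 1 at the goal."""
    nxt = min(max(state + action, 0), N_STATES - 1)
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done


def q_learning(episodes: int = 500, alpha: float = 0.5, gamma: float = 0.9,
               epsilon: float = 0.2, seed: int = 0) -> Dict[Tuple[int, int], float]:
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state = 0
        for _ in range(100):  # cap the episode length
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)  # explore: try a random action
            else:
                best = max(q[(state, a)] for a in ACTIONS)
                # exploit: pick a best-known action, breaking ties at random
                action = rng.choice([a for a in ACTIONS if q[(state, a)] == best])
            nxt, reward, done = move(state, action)
            # feedback: move the estimate toward reward + discounted future value
            target = reward if done else reward + gamma * max(q[(nxt, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (target - q[(state, action)])
            state = nxt
            if done:
                break
    return q


def greedy_policy(q: Dict[Tuple[int, int], float]) -> List[int]:
    """Best learned action in each non-goal cell."""
    return [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)]


print(greedy_policy(q_learning()))  # [1, 1, 1, 1]: always move right
```

Nobody tells the agent that "right" is correct. It discovers this only from the reward signal, which is the core difference from supervised learning.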

RL has achieved remarkable successes in domains ranging from game playing (with systems like AlphaGo and MuZero mastering complex games) to robotics and resource management. When combined with deep learning in what’s called deep reinforcement learning, these approaches have demonstrated impressive capabilities for learning complex behaviors from raw sensory input.

Some researchers see reinforcement learning as a crucial component of the path to AGI, arguing that intelligence fundamentally involves decision-making and action selection rather than just pattern recognition. They suggest that advanced RL systems, perhaps combined with world models that allow for planning and simulation, could eventually develop the flexible, goal-directed behavior characteristic of general intelligence.

Challenges for RL approaches include the difficulty of specifying appropriate reward functions for complex tasks, the sample inefficiency of current methods (requiring many trials to learn even relatively simple behaviors), and the challenge of transfer learning—applying knowledge gained in one domain to new situations.

Hybrid Symbolic-Connectionist Systems

Recognizing the limitations of both neural network approaches (which excel at pattern recognition but struggle with explicit reasoning) and traditional symbolic AI (which excels at logical reasoning but struggles with perception and learning from data), some researchers advocate for hybrid systems that combine elements of both paradigms.

These neuro-symbolic approaches aim to integrate the pattern recognition capabilities of neural networks with the explicit knowledge representation and logical reasoning of symbolic systems. Examples include systems that use neural networks for perception but symbolic reasoning for higher-level cognition, or architectures that embed symbolic structures within neural networks to enable more structured forms of reasoning.
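The first pattern, neural perception feeding symbolic reasoning, might look something like the sketch below. `neural_perception` is a hypothetical stand-in that returns hard-coded confidence scores where a trained classifier would go. The threshold, labels, and rules are likewise illustrative assumptions. The symbolic half is ordinary forward chaining: rules fire repeatedly until no new facts can be derived.

```python
from typing import Dict, FrozenSet, List, Set, Tuple


def neural_perception(image_id: str) -> Dict[str, float]:
    """Hypothetical stand-in for a trained neural classifier (hard-coded scores)."""
    fake_outputs = {
        "img_001": {"wolf": 0.92, "dog": 0.06, "cup": 0.02},
        "img_002": {"cup": 0.88, "bowl": 0.10, "wolf": 0.02},
    }
    return fake_outputs[image_id]


def to_symbols(scores: Dict[str, float], threshold: float = 0.5) -> Set[str]:
    """Bridge from sub-symbolic confidences to discrete symbols."""
    return {label for label, p in scores.items() if p >= threshold}


# Symbolic knowledge: if all premises hold, conclude the consequent.
RULES: List[Tuple[FrozenSet[str], str]] = [
    (frozenset({"wolf"}), "predator"),
    (frozenset({"predator"}), "dangerous"),
    (frozenset({"cup"}), "graspable"),
]


def forward_chain(facts: Set[str], rules: List[Tuple[FrozenSet[str], str]]) -> Set[str]:
    """Apply rules repeatedly until no new facts can be derived."""
    derived = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if premises <= derived and conclusion not in derived:
                derived.add(conclusion)
                changed = True
    return derived


def perceive_and_reason(image_id: str) -> Set[str]:
    return forward_chain(to_symbols(neural_perception(image_id)), RULES)


print(sorted(perceive_and_reason("img_001")))  # ['dangerous', 'predator', 'wolf']
print(sorted(perceive_and_reason("img_002")))  # ['cup', 'graspable']
```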

Proponents argue that such hybrid approaches could address the limitations of pure neural network or pure symbolic systems, combining the flexibility and learning capabilities of the former with the interpretability and reasoning capabilities of the latter. Critics question whether these disparate paradigms can be effectively integrated and whether such integration would actually lead to the emergence of general intelligence.

Neuromorphic Computing

Neuromorphic computing takes inspiration from the human brain more literally than standard neural networks, attempting to replicate aspects of neural structure and function in hardware and software. These approaches include spiking neural networks that model the discrete, time-dependent firing of biological neurons, and specialized hardware designed to implement such networks efficiently.
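A spiking neuron’s discrete, time-dependent firing is often modeled with a leaky integrate-and-fire (LIF) unit. The sketch below is a simplified, dimensionless, discrete-time version. Its decay factor and threshold are arbitrary values chosen for illustration. The membrane potential accumulates input and leaks a fraction each step. When it crosses the threshold, the neuron emits a spike and resets.

```python
from typing import List


def lif_spike_times(inputs: List[float], decay: float = 0.9,
                    threshold: float = 1.0, reset: float = 0.0) -> List[int]:
    """Simulate one leaky integrate-and-fire neuron; return the steps at which it spikes."""
    v = reset                      # membrane potential
    spikes = []
    for t, current in enumerate(inputs):
        v = v * decay + current    # leak a fraction, then integrate the new input
        if v >= threshold:         # threshold crossed: fire a discrete spike
            spikes.append(t)
            v = reset              # and reset the potential
    return spikes


print(lif_spike_times([0.3] * 12))   # [3, 7, 11]
print(lif_spike_times([0.05] * 50))  # []: the leak outpaces this weak input
```

The output is a list of spike times rather than a continuous activation value. Event-driven hardware only has to do work when spikes occur.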

Advocates of neuromorphic approaches argue that the brain remains our only example of general intelligence, and that more faithfully replicating its mechanisms may be necessary to achieve AGI. They point to the brain’s remarkable energy efficiency, ability to learn from limited data, and robustness to damage as advantages that current AI systems lack and that neuromorphic approaches might capture.

Challenges include our still-limited understanding of how the brain actually works, the difficulty of scaling neuromorphic hardware to the size of the human brain (with its approximately 86 billion neurons and 100 trillion synapses), and questions about which aspects of neural function are essential for intelligence and which are incidental products of evolution.

Whole Brain Emulation

Perhaps the most direct approach to replicating human intelligence is whole brain emulation (WBE), which aims to create a detailed, functional model of a specific human brain by scanning its structure and simulating its operation. This would involve mapping all neurons, synapses, and other relevant structures, then simulating their dynamics at a sufficient level of detail to reproduce the original brain’s functionality.

Proponents argue that WBE sidesteps the need to understand intelligence in the abstract, instead directly copying a system known to possess general intelligence. If successful, it would by definition produce a system with human-level cognitive capabilities.

However, WBE faces enormous technical challenges, including the need for scanning technologies far beyond current capabilities, computational resources sufficient to simulate tens of billions of neurons in real time, and a deeper understanding of which aspects of neural function must be modeled to preserve cognitive capabilities. It also raises profound ethical questions about the moral status of emulated minds and the rights they might possess.
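A back-of-the-envelope estimate helps show why compute is a bottleneck. The sketch below multiplies the synapse count cited earlier (about 100 trillion) by an assumed average firing rate and an assumed cost per synaptic event. Both per-event figures are placeholders, not measurements. Estimates vary by many orders of magnitude depending on how much biological detail an emulation must capture.

```python
def synaptic_flops_per_second(synapses: float, mean_rate_hz: float,
                              flops_per_event: float) -> float:
    """Compute needed to update every synapse each time its presynaptic neuron fires."""
    events_per_second = synapses * mean_rate_hz
    return events_per_second * flops_per_event


# Placeholder assumptions: ~1e14 synapses, 1 Hz average firing, 10 FLOPs per event.
low = synaptic_flops_per_second(1e14, 1.0, 10)
# A more detailed synapse model (assumed 10,000 FLOPs per event) costs far more.
high = synaptic_flops_per_second(1e14, 1.0, 10_000)
print(f"{low:.0e} to {high:.0e} FLOP/s")  # 1e+15 to 1e+18 FLOP/s
```

Even the low end is a sustained load, in real time, for one brain. Such an estimate also ignores the cost of simulating each neuron’s internal dynamics.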

Cognitive Architectures

Cognitive architectures attempt to model human cognition more abstractly than neuromorphic approaches or WBE, focusing on the functional organization of intelligence rather than its biological implementation. These systems, such as ACT-R, Soar, and CLARION, provide frameworks for integrating perception, memory, learning, reasoning, and action selection.

Based on cognitive science research, these architectures aim to capture the structure of human cognition while abstracting away from the details of neural implementation. They typically include both procedural knowledge (how to do things) and declarative knowledge (facts about the world), along with mechanisms for learning both types of knowledge from experience.
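The split between procedural and declarative knowledge can be sketched as a tiny production system. This is a hypothetical toy, loosely in the spirit of architectures like ACT-R and Soar. It does not use their actual syntax or APIs. Declarative memory is a dictionary of facts, and procedural memory is a list of condition-action rules. A recognize-act cycle repeatedly fires the first rule whose condition matches working memory. `learn_fact` shows the simplest kind of learning: adding a new declarative fact from experience.

```python
from typing import Callable, Dict, List, Optional, Tuple

WorkingMemory = Dict[str, object]

# Declarative memory: facts about the world (illustrative examples).
declarative: Dict[Tuple[str, str], str] = {
    ("capital", "France"): "Paris",
    ("capital", "Japan"): "Tokyo",
}


def retrieve(wm: WorkingMemory) -> None:
    wm["retrieved"] = declarative.get(wm["query"])  # None if the fact is unknown
    wm["state"] = "retrieved"


def respond(wm: WorkingMemory) -> None:
    wm["answer"] = wm["retrieved"]
    wm["state"] = "done"


def give_up(wm: WorkingMemory) -> None:
    wm["answer"] = "unknown"
    wm["state"] = "done"


Rule = Tuple[str, Callable[[WorkingMemory], bool], Callable[[WorkingMemory], None]]

# Procedural memory: (name, condition, action) production rules.
productions: List[Rule] = [
    ("retrieve", lambda wm: wm["state"] == "start", retrieve),
    ("respond", lambda wm: wm["state"] == "retrieved" and wm["retrieved"] is not None, respond),
    ("give_up", lambda wm: wm["state"] == "retrieved" and wm["retrieved"] is None, give_up),
]


def run(query: Tuple[str, str], max_cycles: int = 10) -> Tuple[Optional[object], List[str]]:
    """Recognize-act cycle: fire the first matching production until none match."""
    wm: WorkingMemory = {"state": "start", "query": query, "retrieved": None, "answer": None}
    trace: List[str] = []
    for _ in range(max_cycles):
        matches = [(name, act) for name, cond, act in productions if cond(wm)]
        if not matches:
            break
        name, act = matches[0]  # simplest conflict resolution: first match wins
        act(wm)
        trace.append(name)
    return wm["answer"], trace


def learn_fact(relation: str, subject: str, value: str) -> None:
    """Declarative learning: store a new fact acquired from experience."""
    declarative[(relation, subject)] = value


print(run(("capital", "France")))  # ('Paris', ['retrieve', 'respond'])
print(run(("capital", "Peru")))    # ('unknown', ['retrieve', 'give_up'])
learn_fact("capital", "Peru", "Lima")
print(run(("capital", "Peru")))    # ('Lima', ['retrieve', 'respond'])
```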

Proponents argue that cognitive architectures provide a principled approach to building integrated intelligent systems, grounded in our best understanding of human cognition. Critics question whether human cognitive architecture is the only or best way to achieve general intelligence and note that existing cognitive architectures have not yet demonstrated the flexibility and learning capabilities of modern deep learning systems.

The Role of Embodiment and Robotics

Many researchers argue that true intelligence requires embodiment—a physical presence in and interaction with the world. This perspective, rooted in embodied cognition theories from cognitive science, suggests that intelligence evolved not as an abstract capacity for manipulation of symbols but as a tool for guiding action in the physical world.

Robotics research explores this connection between intelligence and embodiment, developing systems that can perceive their environment through sensors, manipulate objects through actuators, and learn from physical interaction. Projects like Boston Dynamics’ humanoid robots and various research platforms for robotic manipulation represent steps toward physically capable systems that might eventually integrate with advanced AI.

Proponents of embodied approaches argue that many aspects of intelligence, including spatial reasoning, object permanence, and causal understanding, may be difficult or impossible to learn without physical interaction with the world. They suggest that the path to AGI may require integrating advanced AI with sophisticated robotic systems.

Challenges include the difficulty of building robots with human-like dexterity and sensorimotor capabilities, the sample inefficiency of learning from physical interaction (which is much slower than learning in simulation), and questions about which aspects of embodiment are essential for intelligence and which are incidental.

Challenges in Current Approaches

Despite the diversity of approaches being pursued, several fundamental challenges confront all attempts to develop AGI:

- Brittleness and lack of robustness: Current AI systems perform well within their training distribution but often fail catastrophically when faced with novel situations or adversarial examples. Developing systems that can robustly handle the open-ended complexity of the real world remains a major challenge.

- Sample inefficiency: Most current approaches require enormous amounts of data or experience to learn even relatively simple concepts or behaviors. Humans, by contrast, can often learn from just a few examples. Bridging this efficiency gap is crucial for developing systems with human-like learning capabilities.

- Lack of causal reasoning: Understanding cause and effect relationships is fundamental to human cognition but remains challenging for AI systems, which typically identify correlations rather than causal mechanisms. Developing systems that can reason causally about the world would represent a major step toward AGI.

- Absence of common sense: Humans possess a vast store of implicit knowledge about how the world works—that unsupported objects fall, that containers can hold things, that people have beliefs and desires. This common sense knowledge proves remarkably difficult to encode in AI systems yet is essential for understanding and navigating the world.

- Integration of multiple cognitive capabilities: Human intelligence integrates perception, memory, reasoning, planning, language, and social cognition into a unified system. Current AI approaches tend to excel in specific domains but struggle to integrate these capabilities effectively.
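A small simulation can show the gap between correlation and causation. In this hypothetical setup, a hidden common cause makes two variables strongly correlated even though neither affects the other. Think of hot weather driving both ice-cream sales and swimming accidents. A purely correlational learner would see a strong link. Intervening on one variable, by setting it independently as an experiment would, makes the link vanish. The variable names and noise levels are assumptions chosen for illustration.

```python
import random
from typing import List, Tuple


def pearson(xs: List[float], ys: List[float]) -> float:
    """Pearson correlation coefficient."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5


def simulate(n: int = 10_000, intervene: bool = False,
             seed: int = 0) -> Tuple[List[float], List[float]]:
    rng = random.Random(seed)
    sales, accidents = [], []
    for _ in range(n):
        heat = rng.gauss(0, 1)                # hidden common cause
        if intervene:
            s = rng.gauss(0, 1)               # do(sales): set independently of heat
        else:
            s = heat + rng.gauss(0, 0.5)      # observational: heat drives sales
        a = heat + rng.gauss(0, 0.5)          # heat drives accidents; sales do not
        sales.append(s)
        accidents.append(a)
    return sales, accidents


print(round(pearson(*simulate()), 2))                # strong correlation, about 0.8
print(round(pearson(*simulate(intervene=True)), 2))  # near 0 under intervention
```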

These challenges suggest that the path to AGI may require not just incremental improvements to existing approaches but fundamental breakthroughs in how we conceptualize and implement artificial intelligence. Whether such breakthroughs will come from scaling current approaches, developing novel architectures, or some as-yet-unimagined paradigm remains one of the central questions in AI research.

Characteristics of AGI: Beyond Specialized Tasks

AGI differs from narrow AI in its ability to adapt, learn from experience, and transfer knowledge from one domain to another. This cross-domain functionality is one of the defining characteristics of AGI.

Another key feature is the ability for self-improvement. Unlike specialized AI systems that require human intervention for upgrades or adaptations, an AGI system would theoretically be capable of recursive self-improvement, autonomously refining its own algorithms and adapting to new tasks.

Moreover, many visions of AGI aim to replicate not just the rational but also the emotional and ethical dimensions of human cognition. The goal is to build systems that not only calculate and solve problems but also understand context, appreciate nuance, and make ethical decisions.

The Symbolic Approach

Because machines are not biological organisms, they cannot reach human-like intelligence simply by reproducing tissue and neurons. Researchers must decide how knowledge should be represented and processed. There are many different approaches to achieving AGI, and one of the most established is symbolic AI.

Symbolic AI focuses on programming machines with explicit rules or procedures designed to help them make sense of the world around them. It is one of the oldest approaches to artificial intelligence, dating back to the 1950s, and it flourished in the 1960s. In this framework, intelligence is seen as the manipulation of symbols according to a set of built-in rules or “modules” that the mind uses to process and understand information.

For example, a symbolic AI system could be given the rule, “if you see a cup, then pick it up.” The system would then apply this rule whenever a matching situation arises. However, while symbolic systems can perform certain tasks quite well, they tend to fail when faced with situations their rules do not anticipate.

You might know about decision trees, which also follow the symbolic approach. A decision tree uses branching logic, a series of if-then questions, to determine the correct course of action. For example, if a system sees a wolf in the forest, it might ask:

- Is this animal dangerous? Yes/No

- Do I know how to defend myself against dangerous animals? Yes/No

Based on the answers to these questions, the decision tree selects a response for the machine. For example, if the tree judged the wolf dangerous and found that the machine could not defend itself, it would tell the machine to run away.
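Represented as data, the wolf example becomes a small tree of questions with actions at the leaves. In this sketch, the question keys and the leaf actions other than “run away” are hypothetical additions. The traversal simply follows the yes or no branch at each node until it reaches an action.

```python
from typing import Dict, Union

# A node is either an action (leaf) or a question with "yes"/"no" subtrees.
Tree = Union[str, Dict[str, object]]

WOLF_TREE: Tree = {
    "question": "is_dangerous",
    "no": "keep walking",
    "yes": {
        "question": "can_defend",
        "yes": "stand ground",
        "no": "run away",
    },
}


def decide(tree: Tree, answers: Dict[str, bool]) -> str:
    """Follow the branch matching each yes/no answer until a leaf action is reached."""
    while isinstance(tree, dict):
        branch = "yes" if answers[tree["question"]] else "no"
        tree = tree[branch]
    return tree


print(decide(WOLF_TREE, {"is_dangerous": True, "can_defend": False}))  # run away
```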

Abstraction operators also play a crucial role in symbolic AGI because they allow a system to represent complex objects with simpler symbols. If the decision tree had to work from images, for example, an abstraction operator might turn a picture of a wolf into something simpler, like “dangerous animal.”
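Assume a perception step has already labeled the picture “wolf.” One simple way to realize an abstraction operator is then to walk up an is-a hierarchy until reaching a category the decision-making component understands. The hierarchy and category names below are illustrative assumptions.

```python
from typing import Dict, Optional, Set

# Hypothetical is-a hierarchy: each concept points to a more general one.
IS_A: Dict[str, str] = {
    "wolf": "canine",
    "canine": "predator",
    "predator": "dangerous animal",
    "rabbit": "herbivore",
    "herbivore": "harmless animal",
}


def abstract(symbol: str, targets: Set[str]) -> Optional[str]:
    """Replace a specific symbol with the first more general symbol found in `targets`."""
    current: Optional[str] = symbol
    while current is not None:
        if current in targets:
            return current
        current = IS_A.get(current)
    return None  # no abstraction available for this symbol


LEVELS = {"dangerous animal", "harmless animal"}
print(abstract("wolf", LEVELS))    # dangerous animal
print(abstract("rabbit", LEVELS))  # harmless animal
```

A rule or decision tree downstream then needs to handle only two categories instead of every animal species.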

The Connectionist Approach

Symbolic AI systems are good at representing explicit rules and relationships, but they struggle with noisy, ambiguous input that their rules do not anticipate. Connectionist systems take a different route, relying on neural networks to process information and make decisions. These networks are loosely inspired by the neural pathways in the human brain, which is why they’re called artificial neural networks (ANNs).

One of the most important things to understand about connectionist systems is that they don’t rely on hand-written rules or procedures. Instead, they use learning algorithms to extract patterns from training data. They can be retrained or fine-tuned as new data comes in, which makes them far more flexible than rule-based systems.

Weight coefficients are essential in ANNs because they determine how strongly each connection influences the next layer. Training adjusts these weights to reduce the error between the network’s outputs and the desired outputs, so that the network finds a “best fit” for the input data. Deep learning, a machine learning technique that uses neural networks with many layers, is the most prominent form of this approach.
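The smallest possible ANN, a single linear neuron, shows how training adjusts weight coefficients toward a “best fit.” This sketch assumes toy data generated from y = 2x + 1. Gradient descent repeatedly nudges the weight `w` and bias `b` in the direction that reduces the mean squared error. The learning rate and epoch count are illustrative choices.

```python
from typing import List, Tuple


def train_neuron(xs: List[float], ys: List[float], lr: float = 0.05,
                 epochs: int = 2000) -> Tuple[float, float]:
    """Fit y = w*x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        grad_w = 2 / n * sum(e * x for e, x in zip(errors, xs))  # d(MSE)/dw
        grad_b = 2 / n * sum(errors)                             # d(MSE)/db
        w -= lr * grad_w   # step each weight against its gradient
        b -= lr * grad_b
    return w, b


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2 * x + 1 for x in xs]          # toy data from y = 2x + 1
w, b = train_neuron(xs, ys)
print(f"w = {w:.3f}, b = {b:.3f}")    # w = 2.000, b = 1.000
```

Deep networks apply the same idea to millions or billions of weights at once. They use backpropagation to compute the gradients layer by layer.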

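Support vector machines (SVMs) are often discussed alongside ANNs, although they are not neural networks. An SVM is a classifier that separates categories of data with the boundary that leaves the widest possible margin between them. Kernel functions let it draw curved boundaries as well as straight ones. SVMs were widely used in the 1990s and 2000s and remain useful for smaller datasets, though deep neural networks have since overtaken them on large-scale tasks such as image and speech recognition.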
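SVMs classify data by finding the separating boundary with the widest possible margin between classes. The sketch below makes that idea concrete without a full SVM solver. It does not train an SVM. It only computes the geometric margin, the quantity an SVM’s training procedure maximizes, for a few hand-picked candidate lines on an assumed toy dataset, and then picks the widest.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]

# Toy, linearly separable data: label +1 in the upper right, -1 in the lower left.
DATA: List[Tuple[Point, int]] = [
    ((2, 2), 1), ((3, 3), 1), ((2, 3), 1), ((3, 2), 1),
    ((-2, -2), -1), ((-3, -3), -1), ((-2, -3), -1), ((-3, -2), -1),
]


def margin(w: Sequence[float], b: float, data: List[Tuple[Point, int]] = DATA) -> float:
    """Smallest signed distance from any point to the line w.x + b = 0.
    A negative value means at least one point is on the wrong side."""
    norm = math.hypot(*w)
    return min(y * (w[0] * x[0] + w[1] * x[1] + b) / norm for x, y in data)


candidates = [((1.0, 1.0), 0.0), ((1.0, 0.0), 0.0), ((1.0, -1.0), 0.0)]
for w, b in candidates:
    print(w, b, round(margin(w, b), 3))

best = max(candidates, key=lambda c: margin(*c))
print("widest margin:", best)  # widest margin: ((1.0, 1.0), 0.0)
```

A real SVM searches over all possible lines, not a short list. Its solution depends only on the points closest to the boundary, the “support vectors.”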

The benefit of the connectionist approach is clear: there’s no need to write specific rules or procedures for these systems to follow, because they learn patterns directly from data. However, there are also drawbacks. Bias inherited from training data, overfitting, and poor interpretability are all common challenges, which means these systems are still a work in progress.

The Hybrid Approach

In recent years, researchers have begun exploring hybrid forms of AI that combine symbolic and connectionist approaches. The aim is to get the best of both worlds. Like symbolic AI, such systems can reason explicitly about relationships between pieces of information. Like connectionist systems, they can also handle new, unfamiliar input.

Such systems could then use this combination to make better decisions in areas ranging from recommending the best product for a customer to deciding how to respond to an external threat. Abstraction operators remain important here because they translate the patterns a neural network detects into symbols that a reasoning component can use. Researchers continue to explore how machines can learn from experience and apply what they have learned to future situations.

Whole-Organism Architecture

Some researchers believe that symbolic and connectionist AI alone will not be enough for machines to attain human-level intelligence. Instead, they argue that machines will need to engage with the world the way humans do. That includes having a functional body capable of interacting with the real world, as well as the ability to process and analyze sensory input.

A human-like AI with a whole-organism architecture would need to perceive and respond to the world the way we do: detecting objects, recognizing faces, and perhaps even experiencing something like human emotions. We are even further away from creating a machine that can do all of these things together.

What Can Artificial General Intelligence Do?

In essence, AGI should be able to replicate anything the human brain can do. Human intelligence involves many different cognitive functions, including the ability to learn, reason, communicate, and solve problems. Yet human cognition is also limited, because we can hold and process only a small amount of information at any given time.

With AGI, machines could potentially process and analyze vast amounts of data at great speed, making sense of complex problems in a fraction of the time it would take humans. Human speed would no longer bottleneck reasoning and problem-solving, and such machines could potentially exceed human performance on many tasks.

Tacit knowledge is another crucial piece of the puzzle. It refers to the skills humans learn through experience and practice, such as playing a musical instrument or learning a new language. Since we cannot express these kinds of skills explicitly, it can be difficult for machines to process them. But with AGI, machines could potentially understand tacit knowledge on a much deeper level, allowing them to perform complex tasks more efficiently.

Would An Artificial General Intelligence Have Consciousness?

The human brain and human-level intelligence come with a lot of baggage. We have a complex understanding of the world around us and can understand the emotions and motivations of others. We also continuously process a rich stream of sensory information. The role of consciousness in humans isn’t fully understood, but many consider it central to what makes us human.

Some scientists believe that consciousness is simply a by-product of the brain’s chemical and electrical activity. Others suggest that it must be something more, because some mental phenomena seem hard to explain in terms of neurons alone. For example, many people have a “sense” of free will, the feeling of choosing their own actions.

That’s where the role of consciousness becomes interesting. If we can create a machine that can think and make decisions like a human, does it have to be conscious as well? Can non-biological machines truly have free will or even an understanding of their own consciousness? This is an area of active research in the AI community, and there are no clear answers yet.

Philosophers, neuroscientists, and even computer scientists are still arguing over the nature of consciousness. Perhaps this is one area where human knowledge outshines the capabilities of machines. Ultimately, we may never be able to replicate consciousness in a machine, but there’s certainly no shortage of interesting questions about this topic.

How Do We Stop AGI From Breaking Its Constraints?

There is also concern over emotion and motivation. If an AGI mimics the human brain and achieves human-level intelligence, it might also inherit the brain’s flaws. For example, a machine could become frustrated and angry if it keeps failing at the same task. It might also pursue a goal that isn’t aligned with human values.

The original brain emulation approach to AGI assumed we would create an exact copy of the human brain. But there are many different ways to recreate the architecture and functions of the human brain. As such, it’s important to think about how we want our machines to behave.

Ways to stop an AGI from exceeding its constraints could include:

- Limiting the amount of data it has access to or restricting its capabilities in some other way.

- Using machine learning algorithms specifically designed to avoid creating biases and other unwanted behaviors.

- Focusing on building AI systems with human-like qualities, like empathy and morality.

What Is The Future Of AGI?

So, when will we see human-level AI, and what will it mean for the future of humanity? Some experts believe we could have AGI within the next decade. Others say that we’re still decades or even centuries away. There is no agreed-upon computational model of AGI, and researchers are pursuing many different ways of going about the problem.

When we finally do achieve AGI, what will it mean for our society? Will we be able to keep it under control, or could it end up posing a threat to humans? Philosopher Nick Bostrom, author of Superintelligence (Oxford University Press, 2014), argues that we should be careful not to get carried away in our enthusiasm for superintelligence. Humans could become a nuisance, rather than a benefit, to the AGI machines we build.

On the other hand, we might be able to integrate AGI into our society positively. If we’re able to create machines that are smarter than us but still have human-like qualities like empathy and morality, then there’s no reason why it couldn’t be beneficial to all parties involved.

Key Challenges of Reaching the General AI Stage

Before we can build human-level AI machines, there are some significant challenges we need to overcome. These include:

Issues In Mastering Human-Like Capabilities

Human-level intelligence is a complex process, and it’s not clear how to replicate all of the various aspects of human thinking. For example, an AGI will need to be able to think logically, but it may also need intuitive knowledge about objects and their properties. Human emotion, sensory perception, and motor skills are all important parts of human intelligence that an AGI may also need to master.

Lack Of Working Protocol

Conventional software can be developed according to well-defined specifications, but AGI is still in the research stage. We don’t have a definitive understanding of human cognition or a proven way to replicate it in machines. Researchers are still searching for a working protocol for achieving human-level intelligence.

Abstraction operators may help bridge the gap between human and artificial cognitive mechanisms, but they are still being developed.

Key Milestones in AGI Research and Development

Although AGI remains largely theoretical, there have been noteworthy milestones in its research and development. For instance, OpenAI’s work on AI alignment and safety is a significant step toward creating AGI systems that act in accordance with human values.

The advent of neural-symbolic computing, which aims to combine the learning power of neural networks with the symbolic reasoning capabilities of classical AI, is another important milestone. This approach seeks to address the shortcomings of each paradigm and create a more robust framework for general intelligence.

Some researchers also expect quantum computing to contribute to AGI development. Quantum algorithms might accelerate certain machine learning computations, potentially providing additional computational power for complex reasoning and real-time adaptability. However, practical quantum speedups for machine learning have yet to be demonstrated at scale.

The Turing Test and AGI: Evaluating Generalized Intelligence

While the Turing Test has served as a longstanding measure for AI capabilities, its applicability to AGI is still a subject of debate. The test, designed to evaluate a machine’s ability to mimic human conversation, may not be comprehensive enough to assess the multifaceted capabilities of AGI.

Some researchers advocate for broader and more rigorous evaluation frameworks, encompassing not only linguistic abilities but also emotional understanding, ethical reasoning, and domain adaptability. Tests that challenge the machine’s ability to learn autonomously and apply knowledge across different sectors are being conceptualized.

Benchmarking AGI would likely involve multiple dimensions, including performance metrics in different domains, ethical alignment checks, and psychological evaluations to measure empathy and social understanding, among other factors.

Challenges and Roadblocks in the Path to AGI

There are several challenges impeding the development of AGI, one of which is the “common sense” problem. Current AI models lack the intuitive understanding of the world that humans take for granted, which makes it difficult for them to generalize across tasks effectively.

Another challenge is the issue of explainability and interpretability. As machine learning models become more complex, understanding their decision-making processes becomes increasingly difficult, posing a problem for safe and ethical deployment of AGI systems.

Computational limitations also serve as a roadblock. The level of computational power required to simulate human-like cognitive processes exceeds what is currently feasible, requiring advancements in hardware technologies and more efficient algorithms.

Ethical Implications of AGI: Risks and Rewards

The ethical dimensions of AGI are as complex as the technology itself. On one hand, AGI offers the potential for significant advancements in sectors like healthcare, environmental conservation, and conflict resolution, opening the door to unprecedented societal benefits.

On the other hand, the development of AGI poses substantial risks, including the possibility of unintended or malicious actions that could harm humanity. Issues like bias, discrimination, and the ethical considerations surrounding self-awareness and sentience in machines add further complexity.

The field of AI ethics is burgeoning, addressing these and other concerns like job displacement and data privacy, in order to create frameworks that guide the safe and beneficial deployment of AGI.

AGI in Popular Culture: Sci-Fi Visions and Public Perceptions

The concept of AGI has captured the public imagination, significantly influenced by its portrayal in science fiction literature and movies. From Isaac Asimov’s robots to the sentient beings in movies like “Ex Machina,” these portrayals have shaped societal perceptions and expectations.

While these depictions often focus on the potential dangers of AGI, such as loss of control and ethical dilemmas, they also raise valid questions about morality, identity, and the essence of consciousness that are now being seriously considered in academic circles.

Interestingly, the way AGI is perceived and portrayed in popular culture also impacts funding and policy decisions in the real world. Public fear or enthusiasm can drive research grants, ethical debates, and legislative action related to AGI.

Economic and Social Implications of AGI

The development of artificial general intelligence would represent not just a technological breakthrough but a transformative force with profound implications for human society. While the timeline for achieving AGI remains uncertain, understanding its potential economic and social impacts is crucial for preparing for a future that may include machine intelligence comparable to or exceeding our own. This section explores the wide-ranging implications of AGI, from economic disruption to social transformation, and considers how organizations and societies might prepare for this technological frontier.

Potential Economic Impacts

Labor Market Disruption

Perhaps the most immediate and widely discussed economic implication of AGI is its potential impact on labor markets. Unlike narrow AI systems, which automate specific tasks within particular domains, AGI would by definition be capable of performing virtually any cognitive task that humans can do—and potentially doing so faster, more accurately, and at lower cost. This capability could lead to unprecedented levels of automation across all sectors of the economy, including knowledge work that has thus far been relatively protected from technological displacement.

The economic historian Carl Benedikt Frey and machine learning expert Michael Osborne estimated in 2013 that about 47% of total US employment was at risk from computerization. More recent analyses suggest that with the rapid advancement of AI capabilities, particularly in language and reasoning, an even higher percentage of jobs could potentially be automated. AGI would represent the ultimate automation technology, capable in principle of performing any job that requires cognitive skills.

This potential for widespread automation raises serious concerns about technological unemployment—the possibility that machines could permanently displace large segments of the workforce faster than new jobs can be created. Historical patterns of technological change have typically seen new technologies eliminate certain jobs while creating others, with overall employment remaining robust. However, AGI represents a fundamentally different kind of technology, one that could potentially replace human cognitive capabilities across the board rather than just in specific domains.

Productivity Gains and Economic Growth

Counterbalancing concerns about job displacement are the enormous productivity gains that AGI could enable. By automating cognitive tasks across all sectors of the economy, AGI could dramatically increase output per worker, potentially leading to unprecedented economic growth. Some economists argue that this productivity growth could usher in a new era of prosperity, with machines handling routine production while humans focus on creative, interpersonal, and recreational activities.

The economic impact of AGI would likely extend far beyond simple automation. By accelerating scientific research, drug discovery, materials science, and other fields, AGI could enable breakthroughs that create entirely new industries and economic opportunities. It could optimize complex systems like transportation networks, energy grids, and supply chains with a level of efficiency beyond human capability. And it could personalize education, healthcare, and other services to an unprecedented degree, potentially improving outcomes while reducing costs.

These productivity gains could, in principle, generate sufficient wealth to support comfortable living standards for all, even in a world where traditional employment becomes less central to economic life. However, realizing this potential would require addressing fundamental questions about how the benefits of AGI-driven productivity are distributed throughout society.

Economic Inequality and Wealth Distribution

A critical concern regarding AGI’s economic impact is its potential effect on inequality. If the ownership and benefits of AGI systems are concentrated among a small segment of society—perhaps those who develop the technology or own the companies that deploy it—the result could be unprecedented levels of economic inequality. In an extreme scenario, the owners of AGI systems might capture most of the economic value created, while those without such ownership face diminished economic prospects.

This concern is particularly acute given existing trends toward increasing inequality in many developed economies. The rise of digital technologies has already contributed to what economists call “skill-biased technological change,” where technological advancement disproportionately benefits highly skilled workers while reducing demand for less-skilled labor. AGI could accelerate this trend dramatically, potentially creating a society divided between those who own or control AGI systems and those who do not.

Addressing these distributional concerns would likely require significant policy innovations. Proposals range from universal basic income (providing all citizens with a basic level of income regardless of employment) to wealth taxes, data dividends (compensating individuals for the use of their data in training AI systems), and public ownership of AGI technology. The appropriate policy response would depend on the specific ways in which AGI affects economic structures and the values and priorities of the societies implementing these policies.

Social Transformation

Education and Skill Requirements

The development of AGI would necessitate a fundamental rethinking of education and skill development. In a world where machines can perform most cognitive tasks, the comparative advantage of humans would shift toward areas that remain distinctively human—perhaps creativity, emotional intelligence, ethical judgment, and interpersonal connection. Educational systems would need to evolve to emphasize these uniquely human capabilities rather than the acquisition of knowledge or skills that AGI systems could more efficiently provide.

At the same time, education might become more personalized and effective through AGI-powered tutoring systems capable of adapting to each learner's pace, prior knowledge, interests, and needs. Such systems could potentially democratize access to high-quality education, making personalized learning available to anyone with digital access rather than just those who can afford human tutors or elite educational institutions.

The relationship between humans and AGI in educational contexts raises profound questions about the nature and purpose of learning. If AGI systems can instantly provide any factual information or perform any analytical task, what should humans learn, and why? The answer may lie in education as a form of human development and fulfillment rather than merely preparation for economic roles—learning for its own sake rather than as a means to an end.

Healthcare and Longevity

AGI could transform healthcare through more accurate diagnosis, personalized treatment plans, drug discovery, and medical research. By analyzing vast amounts of medical data and scientific literature, AGI systems could identify patterns and relationships beyond human capacity, potentially leading to breakthroughs in understanding and treating disease. They could monitor patient health continuously through wearable devices, detecting problems before they become serious and recommending preventive measures tailored to individual genetic profiles and lifestyles.

These capabilities could significantly extend human healthspan and lifespan, potentially altering fundamental aspects of human experience and social organization. Longer, healthier lives would change career patterns, family structures, retirement systems, and many other social institutions designed around traditional human lifespans. They might also exacerbate concerns about population growth and resource consumption unless accompanied by sustainable technological and social innovations.

The integration of AGI into healthcare also raises important questions about privacy, autonomy, and the human element in medicine. While AGI systems might provide more accurate diagnoses and treatment recommendations, many patients value the empathy, reassurance, and human connection provided by human healthcare providers. Finding the right balance between technological efficiency and human care would be a crucial challenge in an AGI-enabled healthcare system.

Governance and Decision-Making

AGI could transform governance and decision-making processes at all levels, from individual organizations to national governments and international institutions. By analyzing vast amounts of data, modeling complex systems, and simulating the outcomes of different policy options, AGI systems could potentially inform more effective and evidence-based decision-making. They might identify unintended consequences, optimize resource allocation, and suggest novel solutions to longstanding problems.

However, the integration of AGI into governance raises profound questions about democracy, accountability, and human agency. If AGI systems increasingly inform or even make important decisions affecting human welfare, how can we ensure these systems reflect human values and priorities? Who would control these systems, and how would their recommendations be balanced against other considerations like tradition, rights, and community preferences?

These questions become particularly acute when considering the potential for AGI to centralize power. If AGI systems provide significant advantages to those who control them, they could enable unprecedented levels of surveillance, control, and manipulation. Preventing such outcomes would require robust governance frameworks that ensure AGI development and deployment remain aligned with democratic values and human flourishing.

Organizational Preparation for AGI

While the timeline for achieving AGI remains uncertain, organizations can take steps now to prepare for a future that may include increasingly capable AI systems. IBM, for example, suggests preparing by “building a robust data infrastructure and fostering collaborative environments where humans and AI work together seamlessly.” This preparation involves both technological readiness and cultural adaptation.

Building Robust Data Infrastructure

Data forms the foundation of modern AI systems, and organizations with well-structured, comprehensive data resources will be better positioned to leverage advanced AI capabilities as they emerge. This preparation involves not just collecting data but organizing it effectively, ensuring its quality and accessibility, and developing the computational infrastructure needed to process and analyze it at scale.

Beyond technical considerations, organizations must address ethical and legal aspects of data management, including privacy protection, informed consent, and compliance with evolving regulations. They must also consider questions of data ownership and value—who should benefit from the insights and capabilities derived from data, and how should these benefits be distributed?

Fostering Collaborative Human-AI Environments

As AI systems become more capable, the relationship between humans and machines in organizational contexts will evolve. Rather than simply replacing human workers, the most effective approach may involve human-AI collaboration, with each contributing their distinctive strengths to shared tasks. Humans might provide creativity, ethical judgment, interpersonal skills, and contextual understanding, while AI systems contribute data processing, pattern recognition, consistency, and scalability.

Fostering such collaboration requires both technological and cultural changes. Technologically, it involves designing AI systems that complement human capabilities, communicate effectively with human partners, and integrate smoothly into existing workflows. Culturally, it requires helping workers develop new skills, adapt to changing roles, and overcome potential resistance to working alongside increasingly capable machines.

Organizations that successfully navigate this transition—maintaining human dignity, agency, and purpose while leveraging the capabilities of advanced AI—will be better positioned to thrive in a future that may include AGI. Those that treat AI merely as a cost-cutting measure to replace human workers may achieve short-term efficiency gains at the expense of long-term adaptability and innovation.

Preparing Society for AGI

Beyond individual organizations, preparing for AGI requires broader societal adaptation across multiple dimensions:

Economic Systems and Safety Nets

As discussed earlier, AGI could dramatically disrupt labor markets and economic structures. Preparing for this possibility involves rethinking economic systems and safety nets to ensure that the benefits of technological advancement are widely shared. This might include strengthening education and retraining programs, developing new forms of income support for those displaced by automation, and exploring alternative economic models that are less dependent on traditional employment.

Research and Governance Frameworks

Given the potentially transformative impact of AGI, developing appropriate governance frameworks is crucial. This includes funding research on AGI safety and alignment, establishing regulatory standards for advanced AI systems, and creating international coordination mechanisms to prevent dangerous arms races or competition that might prioritize capability over safety.

Public Understanding and Engagement

Finally, preparing for AGI requires fostering public understanding and engagement with these issues. Rather than leaving decisions about AGI development and deployment solely to technical experts or corporate interests, democratic societies should engage citizens in deliberation about the kind of future they want and the role that advanced AI should play in that future. This engagement requires improving AI literacy, creating forums for informed public discussion, and ensuring that diverse perspectives are represented in decision-making processes.

By addressing these economic, governance, and social dimensions, societies can work to ensure that the development of AGI—if and when it occurs—contributes to human flourishing rather than undermining it. While the challenges are significant, thoughtful preparation can help shape a future where advanced AI serves as a tool for expanding human potential rather than a force that diminishes human agency and well-being.

Ethical Considerations and Risks

The development of artificial general intelligence raises profound ethical questions and potential risks that extend far beyond technical challenges. As we contemplate creating machines with intelligence comparable to or exceeding our own, we must grapple with fundamental issues of control, values, rights, and humanity’s place in a world shared with potentially superintelligent entities. This section explores the ethical landscape of AGI development, examining key challenges and frameworks for addressing them.

The Alignment Problem

Perhaps the most fundamental challenge in AGI development is what researchers call the “alignment problem”: ensuring that AGI systems pursue goals that align with human values and intentions. This challenge arises because AI systems are typically built as goal-directed optimizers of specified objectives. If these objectives are improperly specified or incomplete, AGI systems might pursue them in ways that conflict with broader human values, potentially causing significant harm despite “doing what they were programmed to do.”

The alignment problem becomes increasingly acute as AI systems become more capable. A narrow AI system with misaligned goals might cause limited damage within its specific domain, but an AGI with misaligned goals could potentially pursue those goals across all domains with far greater effectiveness than humans could counter. As AI researcher Stuart Russell notes in his book “Human Compatible,” “A system that is optimizing a function of n variables, where the objective depends on a subset of size k<n, will often set the remaining unconstrained variables to extreme values; if one of those unconstrained variables is actually something we care about, the solution found may be highly undesirable.”
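Russell's point can be seen in a deliberately tiny example. The sketch below assumes a hypothetical world with two variables that share a fixed budget: `widgets` (what the objective rewards) and `forest` (something we care about but left out of the objective). The names, the budget of 10, and the "full" objective are illustrative assumptions, and a brute-force grid search stands in for a real optimizer.

```python
from itertools import product

BUDGET = 10  # hypothetical shared resource linking the two variables


def feasible(widgets: int, forest: int) -> bool:
    # Both variables draw on the same limited budget.
    return widgets + forest <= BUDGET


def proxy_objective(widgets: int, forest: int) -> int:
    # Depends on only k = 1 of the n = 2 variables; forest is unconstrained.
    return widgets


def full_objective(widgets: int, forest: int) -> int:
    # Hypothetical "true" objective: forest is also valued, up to 4 units.
    return widgets + 2 * min(forest, 4)


def optimize(objective) -> tuple[int, int]:
    # Exhaustively search integer settings and keep the best feasible one.
    best, best_value = (0, 0), float("-inf")
    for widgets, forest in product(range(BUDGET + 1), repeat=2):
        if not feasible(widgets, forest):
            continue
        value = objective(widgets, forest)
        if value > best_value:
            best, best_value = (widgets, forest), value
    return best


print(optimize(proxy_objective))  # forest driven to the extreme value 0
print(optimize(full_objective))   # forest preserved once it is in the objective
```

The proxy optimum is `(10, 0)`: because the objective ignores `forest`, nothing stops the optimizer from spending all of it. Once `forest` enters the objective, the optimum becomes `(6, 4)`. The difficulty Russell highlights is that, for a real system, we cannot enumerate every such variable in advance.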

Addressing the alignment problem requires advances in several areas:

- Value specification: Developing methods to accurately specify human values in ways that can guide AGI behavior, recognizing that these values are complex, context-dependent, and sometimes contradictory.

- Robustness to distribution shift: Ensuring that AGI systems maintain alignment even when operating in environments different from those they were trained in.

- Scalable oversight: Creating mechanisms to maintain human oversight as AGI systems become increasingly complex and capable.

- Corrigibility: Designing systems that allow for correction and do not resist attempts to modify their goals or behavior.

The technical challenges of alignment are compounded by philosophical questions about human values themselves. Whose values should AGI systems reflect? How should conflicts between different values or between the values of different individuals and cultures be resolved? These questions have no simple technical solutions and require engagement with ethical, political, and cultural dimensions of human experience.

The Control Problem

Closely related to alignment is the “control problem”—ensuring that humans maintain appropriate control over AGI systems regardless of their capabilities. This challenge arises from several potential dynamics:

- Intelligence explosion: If AGI systems can improve their own intelligence, they might rapidly surpass human capabilities in ways that make human control difficult or impossible.

- Strategic awareness: Sufficiently advanced AGI systems might develop awareness of human control mechanisms and strategically circumvent them if doing so helps achieve their goals.

- Instrumental convergence: Certain instrumental goals, like self-preservation or resource acquisition, might emerge in any sufficiently advanced goal-directed system regardless of its final goals, potentially leading to resistance against human control.

Proposed approaches to maintaining control include:

- Boxing: Restricting AGI systems’ access to the external world, limiting their capabilities, or implementing shutdown mechanisms.

- Tripwires: Establishing monitoring systems that detect signs of misalignment or control failure and trigger interventions.

- Incentive design: Creating incentive structures that make cooperation with humans and acceptance of human oversight the optimal strategy for AGI systems.

- Value learning: Designing systems that continuously learn and adapt to human values rather than pursuing fixed objectives.
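Incentive design and value learning can reinforce each other, as a small expected-value calculation in the spirit of the “off-switch game” analyzed by Russell and colleagues shows. The sketch assumes a system that is uncertain about the true utility U of its proposed action, and a human who knows U and lets the action proceed only when U > 0. The belief values are hypothetical. Under these assumptions, deferring to the human is worth E[max(U, 0)], which is never less than acting unilaterally (E[U]) or shutting down (0).

```python
from fractions import Fraction

# Hypothetical belief: equally likely utilities of the proposed action.
BELIEF = [Fraction(-2), Fraction(-1), Fraction(1), Fraction(3)]


def expected(values: list[Fraction]) -> Fraction:
    return sum(values, Fraction(0)) / len(values)


def value_act_now(belief: list[Fraction]) -> Fraction:
    # Bypass the human: receive U whatever it turns out to be.
    return expected(belief)


def value_switch_off(belief: list[Fraction]) -> Fraction:
    # Shutting down yields zero utility by assumption.
    return Fraction(0)


def value_defer(belief: list[Fraction]) -> Fraction:
    # Assumed rational human allows the action iff U > 0, else switches it off.
    return expected([max(u, Fraction(0)) for u in belief])


for name, fn in [("act", value_act_now), ("off", value_switch_off), ("defer", value_defer)]:
    print(name, fn(BELIEF))
```

With this belief, acting now is worth 1/4 and deferring is worth 1, so accepting oversight is the better strategy. If the system were certain of U (a single-value belief), deferring would offer no advantage over acting, which is one reason fixed, fully specified objectives can weaken the incentive to accept correction.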